- Feb 28, 2023

- 6 min

AI-driven DX: Generating images with artificial intelligence


Artificial intelligence (AI) is making an impact on many industries, including digital experiences. In this interview with Tobias Kerschbaum, a Solution Architect at Magnolia, we explore how Magnolia enables customers to leverage AI's potential.

Tobias shared a fascinating example with me: DALL-E, an AI that generates images from textual descriptions. In our conversation, he explained how Magnolia's integration with DALL-E works and discussed some of the improvements he is working on. Read on to learn more about this exciting development in AI and digital experience creation.

AI use cases in DX

Sandra: Tobias, you are a Solution Architect at Magnolia and work for our Professional Services team. I know that you’ve recently worked on leveraging artificial intelligence. Can you give us a few examples of how AI is being used to help create digital experiences?

Tobias: We use AI for tasks such as text classification and auto-tagging of images. We also use it to optimize text. For instance, with our WordAI integration, content authors can write text and, with the click of a button, ask for suggestions on how to improve the copy. It's remarkable how much easier something that simple can make a content author's life.

And I expect AI to have even more impact in the future, especially in content creation and particularly for images, which is what we're currently working on.


Image generation with DALL-E in Magnolia

Sandra: Can you tell me more about what you’re working on?

Tobias: The idea has been around for a few months, or even years, but there is now a new version of the DALL-E API, and this one is particularly good.

We see a big benefit from image generation, and we have already discussed this with a few customers. They love the fact that they can create images instantly and don't have to ask a designer every time they need to create or change an image. They can simply describe what they need, click a button, and then choose from several images. This is particularly useful when they need an image for their website quickly.

Another benefit is the ability to create images in a specific context or style. In the latest version of our integration, we can use an image as a base and then change it according to specific instructions. This helps the new image match the style of the base image.

Sandra: You mentioned the DALL-E API. What is DALL-E?

Tobias: DALL-E is an AI that generates images from textual descriptions. Its name is pronounced like that of the painter Salvador Dalí. DALL-E 2, the second version of the model, is a significant improvement over the first.

It's a paid service from OpenAI that you can try out for free.

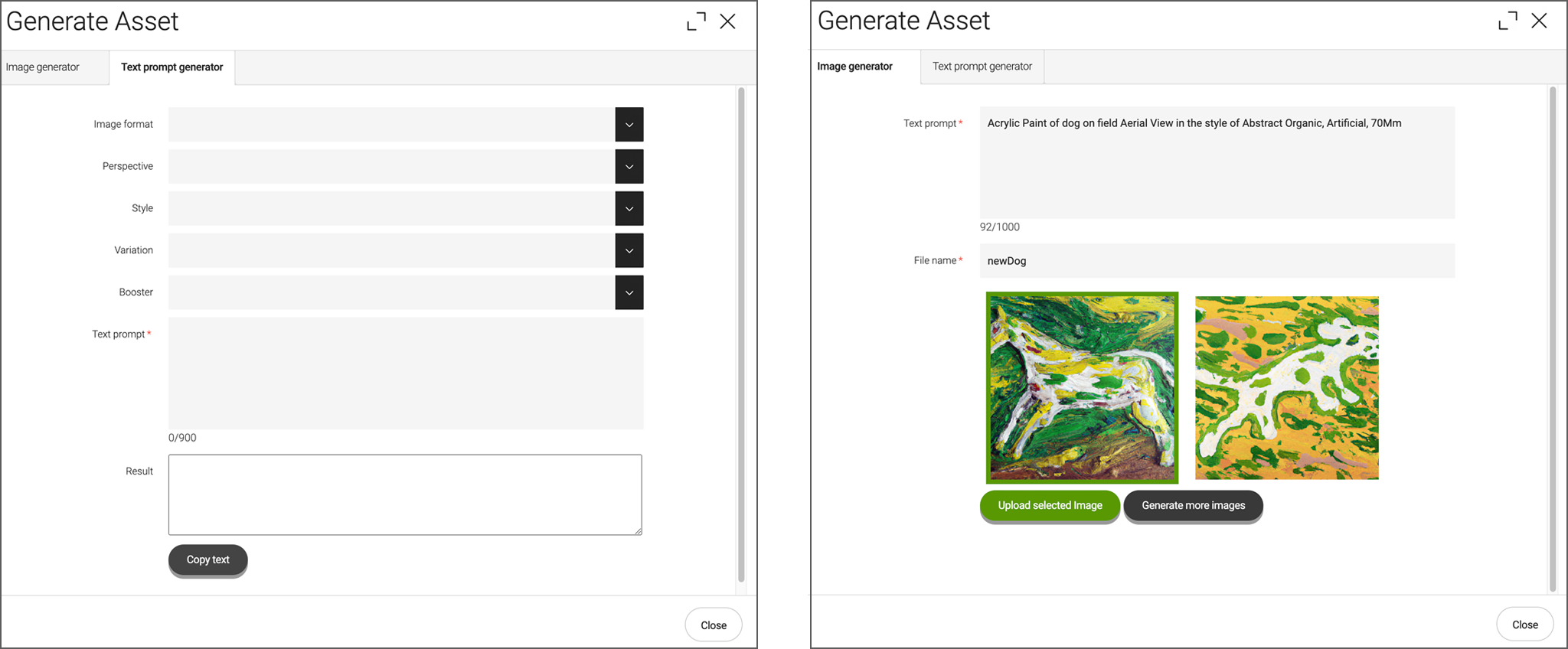

We have integrated it into Magnolia so that authors don't have to leave the platform to download images and upload them again; they can use the service directly within Magnolia. We even built a prompt generator that helps authors write the textual description used to generate an image, which is called a prompt. This helps with the creation process, because at first it can be difficult to know how to write a prompt that produces good results.

Sandra: Very cool. As a marketer, I imagine that I would have access to this feature in my normal UI. Help me understand how this works and how we implement it in Magnolia.

Tobias: The prompt generator is managed by Magnolia and available to content authors in a Magnolia dialog. The AI is an external service that we’re using in the background.

The prompt generator provides a form with drop-down lists and some examples to use as a starting point. For example, you can specify the style, content, and shape of an image. If you want to create images of a specific style frequently, you can also extend the list of prompt criteria using a Magnolia Content App.
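As a rough illustration of what a prompt generator does, the following Python sketch turns a few drop-down selections into a single prompt string. The criteria lists, the `PromptCriteria` class, and `build_prompt` are hypothetical names chosen for this example; the interview does not show how the Magnolia module actually assembles its prompts, and in the real integration the lists would come from the dialog (and could be extended through a content app).

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical option lists standing in for the dialog's drop-downs.
# In Magnolia these could be extended via a content app; values here are illustrative.
STYLES = ["photograph", "watercolor painting", "3D render", "pencil sketch"]
SHAPES = ["square", "wide banner", "portrait"]


@dataclass
class PromptCriteria:
    content: str               # what the image should show, e.g. "a lighthouse at dusk"
    style: str = "photograph"  # must be one of STYLES
    shape: str = "square"      # must be one of SHAPES
    extras: List[str] = field(default_factory=list)  # optional free-text modifiers


def build_prompt(c: PromptCriteria) -> str:
    """Combine the selected criteria into one comma-separated prompt string."""
    if c.style not in STYLES:
        raise ValueError(f"unknown style: {c.style}")
    if c.shape not in SHAPES:
        raise ValueError(f"unknown shape: {c.shape}")
    parts = [c.content.strip(), f"{c.style} style", f"{c.shape} composition", *c.extras]
    # Skip empty parts so a blank modifier does not produce ", ," in the prompt.
    return ", ".join(p for p in parts if p)


print(build_prompt(PromptCriteria("a lighthouse at dusk", "watercolor painting", "wide banner")))
# a lighthouse at dusk, watercolor painting style, wide banner composition
```

Validating against fixed lists keeps authors within criteria that are known to produce usable results, while the free-text `extras` leave room for one-off tweaks.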

For the integration, we created a normal Magnolia dialog that communicates with DALL-E. To generate an image, we take the prompt from the prompt generator and make a REST call to the DALL-E API.
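The REST call can be sketched against OpenAI's public image-generation endpoint (`POST /v1/images/generations`), which accepts a JSON body with `prompt`, `n`, and `size` and a bearer token in the `Authorization` header. This is a minimal standard-library sketch, not the module's actual code: the function names are hypothetical, and reading the key from an `OPENAI_API_KEY` environment variable is an assumption.

```python
import json
import os
import urllib.request

# Public OpenAI endpoint for generating images from a text prompt.
# Assumption: the Magnolia dialog calls an equivalent endpoint; its code is not shown here.
GENERATIONS_URL = "https://api.openai.com/v1/images/generations"


def build_generation_request(prompt: str, api_key: str, n: int = 4,
                             size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) a POST request asking DALL-E for n images."""
    body = json.dumps({"prompt": prompt, "n": n, "size": size}).encode("utf-8")
    return urllib.request.Request(
        GENERATIONS_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def generate_images(prompt: str, n: int = 4) -> dict:
    """Send the request and return the decoded JSON response (requires network access)."""
    # Hypothetical key source; a real module would read it from its own configuration.
    req = build_generation_request(prompt, os.environ["OPENAI_API_KEY"], n=n)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Separating request construction from sending keeps the part that encodes the prompt testable without calling the paid service.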

The results are then shown in the dialog. Finally, Magnolia automatically uploads the image you choose to the Magnolia DAM.
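By default, the generations endpoint returns a JSON object whose `data` array holds one entry per image, each with a `url`. The hypothetical helpers below list the candidate URLs for the dialog and fetch the bytes of the image the author picks. The hand-off to the Magnolia DAM is deliberately left out, since the interview does not describe that API.

```python
import urllib.request
from typing import List


def extract_image_urls(response: dict) -> List[str]:
    """Return the URL of each generated image in a DALL-E API response."""
    # Entries without a "url" (e.g. when base64 output was requested) are skipped.
    return [item["url"] for item in response.get("data", []) if "url" in item]


def download_image(url: str, timeout: float = 60) -> bytes:
    """Fetch the chosen image so it can be stored permanently (e.g. in a DAM)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()
```

OpenAI's API reference describes these URLs as temporary (valid for about an hour), which is one practical reason to copy the chosen image into the DAM right away rather than linking to it.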

Sandra: This creates an image from scratch. You mentioned that DALL-E can also change images. How does that work?

Tobias: The integration offers two options: the prompt generator, which creates an image from scratch, and a second option that starts from an existing image and changes it as needed. The rest of the process is the same: we send the request to the API, get the results back, choose one of them, and upload it to Magnolia.
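For the second option, OpenAI offers an image-edit endpoint (`POST /v1/images/edits`) that takes the base image as a square PNG plus a prompt describing the change, and optionally a mask whose transparent areas mark the region to modify. Because this endpoint expects `multipart/form-data` rather than JSON, the sketch below encodes the form by hand using only the standard library. Whether the Magnolia module uses this particular endpoint is an assumption, and all function names are hypothetical.

```python
import urllib.request
import uuid
from typing import Dict, Optional, Tuple

# Public OpenAI endpoint for editing an existing image (DALL-E 2).
EDITS_URL = "https://api.openai.com/v1/images/edits"


def encode_multipart(fields: Dict[str, str],
                     files: Dict[str, Tuple[str, bytes, str]]) -> Tuple[bytes, str]:
    """Encode text fields and files as multipart/form-data; return (body, content type)."""
    boundary = uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts += [f"--{boundary}".encode(),
                  f'Content-Disposition: form-data; name="{name}"'.encode(),
                  b"",
                  value.encode("utf-8")]
    for name, (filename, data, mime) in files.items():
        parts += [f"--{boundary}".encode(),
                  f'Content-Disposition: form-data; name="{name}"; filename="{filename}"'.encode(),
                  f"Content-Type: {mime}".encode(),
                  b"",
                  data]
    parts += [f"--{boundary}--".encode(), b""]  # closing boundary plus trailing CRLF
    return b"\r\n".join(parts), f"multipart/form-data; boundary={boundary}"


def build_edit_request(image_png: bytes, prompt: str, api_key: str,
                       mask_png: Optional[bytes] = None, n: int = 4,
                       size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) a request that edits image_png according to prompt."""
    files = {"image": ("image.png", image_png, "image/png")}
    if mask_png is not None:
        # Transparent pixels in the mask mark the area DALL-E may change.
        files["mask"] = ("mask.png", mask_png, "image/png")
    body, content_type = encode_multipart({"prompt": prompt, "n": str(n), "size": size}, files)
    return urllib.request.Request(
        EDITS_URL, data=body, method="POST",
        headers={"Content-Type": content_type, "Authorization": f"Bearer {api_key}"})
```

Because the base image carries the context, the prompt only needs to describe the change, which matches the prompt-plus-image workflow described later in the interview.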

Sandra: You’ve built this already, and that’s amazing. What’s next?

Tobias: We have identified other areas for improvement. One is increasing the number of generated images beyond the current limit of four. It would be helpful if authors could request additional images when the initial set isn't satisfactory; this would trigger a new generation run and provide a larger selection to choose from.
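One way to picture the "request more images" idea is a selection object that keeps every batch generated for a prompt and appends a new batch each time the author asks for more. This is a hypothetical Python sketch: `ImageSelection` is an invented name, and `generate` stands in for any function that calls the API and returns image URLs. A stub is used below so the example runs offline.

```python
import itertools
from typing import Callable, List

# Any function that takes (prompt, n) and returns n image URLs.
ImageGenerator = Callable[[str, int], List[str]]


class ImageSelection:
    """Keeps every image generated for one prompt so authors can ask for more."""

    def __init__(self, prompt: str, generate: ImageGenerator, batch_size: int = 4):
        self.prompt = prompt
        self.generate = generate
        self.batch_size = batch_size
        self.images: List[str] = []

    def request_more(self) -> List[str]:
        """Re-run generation with the same prompt and append the new batch."""
        batch = self.generate(self.prompt, self.batch_size)
        self.images.extend(batch)
        return batch


# Offline stand-in for the API: returns n unique placeholder URLs.
_ids = itertools.count(1)


def fake_generate(prompt: str, n: int) -> List[str]:
    return [f"https://example.com/img-{next(_ids)}.png" for _ in range(n)]


selection = ImageSelection("a lighthouse at dusk", fake_generate)
selection.request_more()  # initial batch of four
selection.request_more()  # author is not satisfied and asks for more
print(len(selection.images))  # 8
```

Keeping `batch_size` at four mirrors the integration's current limit; OpenAI's API reference lists up to 10 images per request for DALL-E 2, so larger batches would also be possible.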

Sandra: That's cool. And unlike with a designer, you don't have to worry about hurting someone's feelings when you say, "Actually, you know what, I don't like the first four images."

Tobias: I think this is a good starting point. We have to investigate further and talk to clients about how they would want to use the feature. Some might simply take it and extend it themselves if their project has additional requirements. The source code is open, so everybody can read through it and extend it.

Sandra: Speaking of the code. How are you making this available?

Tobias: We're making it available as a free Magnolia Light Module in a Git repository. You can access it via our Marketplace.

Real-life application of DALL-E

Sandra: It sounds incredible, but how useful is it really?

Tobias: I have to admit that, at first, I was a bit hesitant about generating random images. I wasn't sure if it would be useful in real projects. However, when we showed it to the first one or two customers, I was surprised at how excited they were. They immediately saw the potential, which was encouraging.

From the feedback we received from customers and colleagues, it seems that the best way to use the generator is to combine simple text prompts with images. The image provides the context the AI needs, so the prompt only has to describe the change. For instance, if you ask the AI to turn a person in an image so that they face right instead of left, it can do so easily.

Similarly, you can use DALL-E to extend or modify the image by adding or removing elements. I believe this approach has a lot of potential for future projects. By providing the AI with an image as context, we can generate a lot of useful and interesting content.

Overall, I think this tool will be really helpful for all types of projects.

Sandra: The idea sounds really cool and could be genuinely helpful. As a marketer, I'm not sure yet how we would use it, but I would definitely like to give it a try.